Please Touch Me. Not!

Jun 1, 2021

In this Readers’ Platform, your author describes HMI systems for touchless operation, including those from his Germany-headquartered company.

by Rudolf Sosnowsky

Do you know the theremin? It’s an almost forgotten musical instrument with a special feature: you can play it without touching it. Exactly that, the touchless operation of elevators, is made possible by the solutions presented here. Triggering actions without touching is becoming ever more achievable: opening the trunk of the car with your hands full, answering a phone call while doing the dishes or opening the door of a public restroom without pushing its less-than-appetizing handle.

The “no free hands” situation also exists in the professional world. Maybe your hands are in protective gloves because you handle dangerous substances, or you are not allowed to touch anything with your hands for hygienic and health reasons. There are corresponding regulations: In the food industry, gloves must be worn for many tasks. In the medical sector, where sterility is required, nothing non-sterile may be touched without changing gloves afterward.

The current situation draws interest to the operation of equipment that does not require direct physical contact. According to a study by UltraLeap, a manufacturer of touchless interfaces, the acceptance of touchscreens has declined. Assuming a certain level of complexity (i.e., not that of the proximity sensor of a soap dispenser), there are various options for this, which are presented here.

Challenges and Proposals

Non-contact operation of devices and machines is driven by current precautionary measures, by the requirement for sterility of operators and materials in medical technology and by the need to operate equipment even while wearing protective clothing. These technologies are at the forefront of interaction without direct contact between operator and equipment:

- New-generation infrared touchscreen

- “Flying touchscreen” (remote touchscreen)

- Holographic touch display

- HY-gienic touchscreen

- 3D touchscreen with gesture control

- Offline voice control

There are other technologies that are either still in their infancy or irrelevant to the applications presented here.

Infrared Technology

Infrared (IR) touchscreen technology is well known. A curtain of IR light is interrupted by an object introduced into the beam path; this interruption is evaluated and returned to the controller as a touch event. The touchscreen need not be located directly above the screen, but can also be mounted at some distance. This is particularly advantageous for devices that are at risk of vandalism, such as vending machines and ATMs, as the screen can be protected from mechanical impact by a strong glass pane. For applications that expect optimal optical reproduction, such as medical imaging or prepress, the glass panel can be omitted. In principle, the IR touchscreen also works without an underlying display, so it could also be mounted in front of a printed plate. Its benign electromagnetic compatibility makes it a robust input medium.
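The interruption principle can be illustrated with a minimal sketch. It assumes a hypothetical grid of parallel beams with a uniform pitch; the function name, the 5 mm pitch and the beam layout are illustrative assumptions and do not describe any particular product.

```python
from typing import Optional, Sequence, Tuple


def locate_touch(
    x_blocked: Sequence[bool],
    y_blocked: Sequence[bool],
    pitch_mm: float = 5.0,  # assumed beam spacing
) -> Optional[Tuple[float, float]]:
    """Return the touch position in mm, or None if no touch is detected.

    x_blocked[i] is True when the i-th vertical beam is interrupted,
    y_blocked[j] likewise for the j-th horizontal beam.
    """
    xs = [i for i, blocked in enumerate(x_blocked) if blocked]
    ys = [j for j, blocked in enumerate(y_blocked) if blocked]
    if not xs or not ys:
        return None  # an object must interrupt beams on both axes
    # Use the centre of the shadow on each axis as the touch coordinate.
    x_mm = sum(xs) / len(xs) * pitch_mm
    y_mm = sum(ys) / len(ys) * pitch_mm
    return (x_mm, y_mm)


# A finger shadows beams 3 and 4 on the x axis and beam 2 on the y axis.
x_beams = [False, False, False, True, True, False]
y_beams = [False, False, True, False]
print(locate_touch(x_beams, y_beams))  # (17.5, 10.0)
```

With two simultaneous touches, the shadows on the two axes can no longer be paired unambiguously, which is one way to see why a simple beam grid struggles with multitouch.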

The drawbacks are the parallax caused by the distance between the sensor plane and the image plane, and the fact that standard infrared touchscreens do not support multitouch operation or gesture recognition. They must also be protected from extraneous light with IR filters and are easy to sabotage, e.g., with a piece of chewing gum. The remedy is the new generation of IR touchscreens, which, instead of detecting the interruption of a light grid, evaluates the reflection from an object in the beam path.
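The size of the parallax error can be estimated with simple trigonometry. The sketch below assumes a flat sensor plane parallel to the image plane and a viewing angle measured from the surface normal; the 10 mm gap is an illustrative value, not a figure from the article.

```python
import math


def parallax_offset_mm(gap_mm: float, viewing_angle_deg: float) -> float:
    """Lateral offset between the point aimed at and the point detected.

    gap_mm is the distance between sensor plane and image plane,
    viewing_angle_deg the angle between line of sight and surface normal.
    """
    return gap_mm * math.tan(math.radians(viewing_angle_deg))


# Assumed example: 10 mm gap, user looking at the screen at 30 degrees.
print(round(parallax_offset_mm(10.0, 30.0), 2))  # 5.77
```

Looking straight at the screen gives no offset, while the error grows quickly for oblique viewing angles, which is why the mounting distance matters for small on-screen targets.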

By cleverly arranging emitting laser diodes and receiving photodiodes, two touch events and gestures can be detected simultaneously in the light field. The reflection principle allows mounting on only one side of the housing, which is very advantageous for integration. An intelligent scanning algorithm prevents simple sabotage of the kind described above. A high sampling frequency of 200 Hz, i.e., one scan every 5 ms, ensures detection without noticeable delay. Optimized optics with a narrow light aperture provide high ambient light resistance. A wide temperature range from -40°C to +80°C permits use in demanding environments. Examples include industrial plants, agricultural machinery and special vehicles, large medical equipment and lifestyle applications, such as modern kitchen and home furniture. Figure 1 shows a cross-section of the operating principle.

“Flying Touch” – Detached Touch Screen

The Flying Touch is based on the same principle as the IR touchscreen. The difference is that the IR sensor is mounted like a curtain (see Figure 2) at a distance from the surface. A touch event is detected whenever an object crosses the light path, so it is not necessary to touch the underlying surface; the user is free to stop the finger movement before reaching it. Otherwise, the functionality is the same as with the IR touchscreen.

Holographic Touch Display

The new-generation IR touchscreen plays an important role in touch-free operation. The system consists of two parts: the touchscreen, which detects touch events and gestures with an invisible IR curtain, and an image that is projected “holographically” into the air. In the physical sense, the virtual image is not a hologram, since it does not use monochromatic, coherent light, nor does the image change depending on the viewing angle. Rather, a special material property is used that makes the diffusely emerging light rays converge at the location of the virtual image, thus creating the impression of a free-floating representation. The term “holography” is nevertheless commonly used for this.

3D Projection

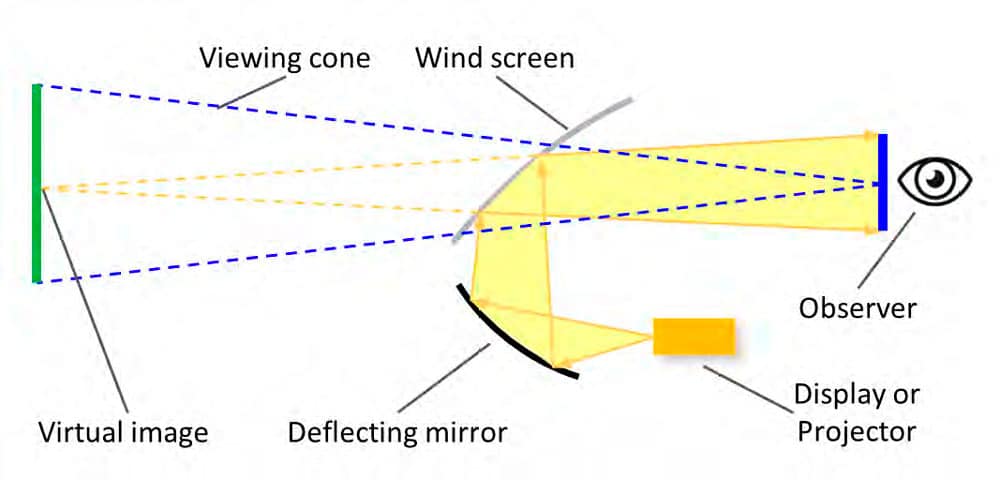

The holographic image appears to stand freely in the air. This effect is caused by an optical plate that deflects the image generated by the source and projects it according to the laws of optics. The principle is similar to that of a head-up display in a car (see Figure 3), where the driver sees an image through the windshield that displays additional information such as speed, traffic signs and navigation instructions in augmented reality. The driver’s attention remains focused forward on the road and the virtual image. The remote display relieves strain on the driver’s eyes, which do not have to accommodate between near (looking at the instrument panel) and far (looking at the road). The image itself is generated by a display or projector in the footwell and projected through a lens unit onto the windshield, which has a partially reflective coating in this area.

In the present system, the setup looks very similar. The 3D plate generates a virtual image that is “tangibly” close to the user. The virtual image is generated at the point that has the same distance to the mirror as the display itself (see Figure 4). If an IR sensor that covers the image surface is now mounted at the image plane, touch events and gestures drawn “in the air” can be detected and evaluated without the user having to touch any parts.

Since there is a fixed angular relationship among the display, projection plate and final image, the installation geometry of the display and the projection plate determines the orientation of the virtual image. Figure 5 shows two common installation situations of a projection system. A vertical image is created by a horizontally mounted display and 45-degree plate, while a horizontal projection plate creates a 45-degree tilted image.
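The angular relationship can be checked with a small geometric sketch. It treats the projection plate as an ideal mirror plane in a two-dimensional side view, following the statement that the image lies at the same distance from the plate as the display. The coordinates, the function names and the 45-degree display tilt in the second case are assumptions chosen for illustration.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, z) in a side view: x horizontal, z vertical


def mirror_point(p: Point, plate_angle_deg: float) -> Point:
    """Mirror p across a plate through the origin tilted by plate_angle_deg."""
    a = math.radians(plate_angle_deg)
    nx, nz = -math.sin(a), math.cos(a)  # unit normal of the plate
    d = p[0] * nx + p[1] * nz           # signed distance of p from the plate
    return (p[0] - 2 * d * nx, p[1] - 2 * d * nz)


def tilt_deg(p: Point, q: Point) -> float:
    """Tilt of the segment p-q against the horizontal, folded into 0..180."""
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0])) % 180.0


# Case 1: horizontal display below a plate tilted by 45 degrees.
display = ((1.0, 0.0), (3.0, 0.0))
image = tuple(mirror_point(p, 45.0) for p in display)
print(round(tilt_deg(*image), 1))  # 90.0, i.e. a vertical image

# Case 2: horizontal plate, display assumed to be tilted by 45 degrees.
tilted_display = ((0.0, -1.0), (1.0, -2.0))
flat_plate_image = tuple(mirror_point(p, 0.0) for p in tilted_display)
print(round(tilt_deg(*flat_plate_image), 1))  # 45.0
```

Both results agree with the two installation situations described for Figure 5: the image orientation follows directly from mirroring the display at the plate.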

Applications

Places and devices that are used by many people are predestined for contactless applications. Digital signage in retail, e.g., menu selection in fast-food restaurants, manages without direct contact, as do orientation and information boards in shopping centers. Elevator controls, both for call buttons on the floor and for floor selection in the car, are obvious candidates.

HY-gienic Touchscreen

The HY-gienic touchscreen is based on the fact that UV-C radiation has a killing effect on germs and viruses. This is a pro and a con: UV-C radiation does kill unwanted cultures, but it is also harmful to organisms. Irradiation of the human body or parts of the body should be avoided at all costs. Since it is invisible, extreme caution must be exercised. Effectiveness depends on the dose, which is the intensity multiplied by the exposure time. If a touchscreen surface is to remain hygienically clean for each user, it must be cleaned before the next user approaches the terminal. Since the exposure time is considerable, continuous operation is difficult to achieve. In addition, continuous operation should not use consumables, and the technology should be able to withstand many touches without wear.
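The dose relationship translates directly into a required exposure time. The sketch below only restates dose = intensity × time; the numeric values are illustrative placeholders and not germicidal recommendations.

```python
def exposure_time_s(target_dose_mj_cm2: float, irradiance_mw_cm2: float) -> float:
    """Exposure time needed to reach a dose at a given irradiance.

    Dose (mJ/cm^2) = irradiance (mW/cm^2) * time (s).
    """
    if irradiance_mw_cm2 <= 0:
        raise ValueError("irradiance must be positive")
    return target_dose_mj_cm2 / irradiance_mw_cm2


# Assumed example values: 20 mJ/cm^2 target dose at 0.5 mW/cm^2.
print(exposure_time_s(20.0, 0.5))  # 40.0 seconds
```

Doubling the irradiance halves the exposure time, which is the lever available when the cleaning cycle has to fit between two users.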

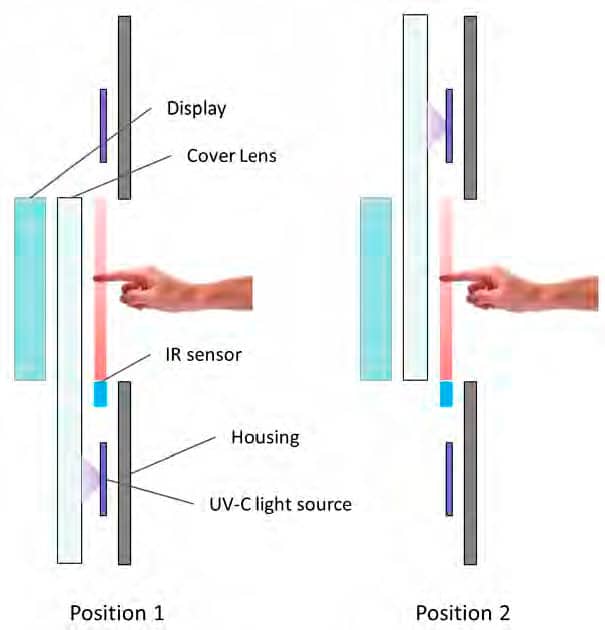

The idea behind the proposed solution is to alternate between operation and disinfecting exposure without interrupting operation. For a “wear-free” solution, it is desirable to avoid contact between the operator and the surface for the function itself. This requirement can be met by implementing an IR touchscreen for the touch function. It should be mounted at a distance from the cover glass so that the glass does not need to be touched. If the glass is touched nevertheless, it must be disinfected. The requirement for the terminal to be available at all times and cleaned before the next user can be met by a cover glass that is moved to a cleaning station after each user. If the cover glass is twice the size of the required viewing area, one portion can be covered and disinfected while the user operates the visible portion. Also see Figure 6.

How Disinfection Works

The cover glass is twice as large as the active display area. So while one part is accessible for operation, the second part is covered and decontaminated in the UV zone. Since the IR sensor is mounted in a plane in front of the cover glass, no physical contact between the operator and the cover glass is required. After the operator completes the procedure, the open, potentially contaminated portion of the cover glass is moved to the UV zone on the opposite side. A clinical trial is underway and a patent application has been filed for this technology.
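The alternating scheme can be modeled as a small state machine. This is a minimal sketch with hypothetical names (`SlidingCoverGlass`, sections "A" and "B"); it is not the control logic of the actual product.

```python
class SlidingCoverGlass:
    """Two-section cover glass: one section exposed, one in the UV station."""

    def __init__(self) -> None:
        self.exposed = "A"
        self.in_uv = "B"
        self.disinfected = {"A": True, "B": True}

    def user_finished(self) -> None:
        """Treat the exposed section as contaminated and swap the sections."""
        self.disinfected[self.exposed] = False
        self.exposed, self.in_uv = self.in_uv, self.exposed

    def uv_cycle_finished(self) -> None:
        """Mark the section in the UV station as disinfected."""
        self.disinfected[self.in_uv] = True

    def ready_for_next_user(self) -> bool:
        return self.disinfected[self.exposed]


glass = SlidingCoverGlass()
glass.user_finished()        # user 1 leaves, section A moves into the UV station
print(glass.exposed, glass.ready_for_next_user())  # B True
glass.uv_cycle_finished()    # section A is disinfected while user 2 operates
glass.user_finished()        # user 2 leaves, section B moves into the UV station
print(glass.exposed, glass.ready_for_next_user())  # A True
```

If a user finished before the UV cycle for the other section had completed, `ready_for_next_user()` would return False in this model, so the exposure time has to fit within a typical session.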

Applications

There is a wide range of applications. Target markets are public terminals such as vending machines, points of sale, ATMs, check-in/check-out terminals in hotels and airports and human-machine interfaces in healthcare systems.

3D Gesture Control

In contrast to the quantitative control of a computer-aided design, or CAD, system, only qualitative control is possible when controlling a human-machine interface (HMI) via gestures. This does not require absolute coordinates, only relative positions.

The control can be used to wake the device from standby mode when approached, and quasi-analog settings such as volume can be made with a rotary motion. Horizontal wiping is interpreted as a scrolling motion, and a static position can also trigger timed events, as known from conventional touchscreens: “Hover” displays further information or triggers an event, comparable to pressing the right mouse button.
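How relative positions can be turned into gestures is sketched below. The classifier, its thresholds and the gesture names are assumptions made for illustration; production controllers use considerably more robust methods.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def classify_gesture(points: List[Point], min_travel: float = 0.5) -> str:
    """Classify a track of relative (x, y) positions as swipe or rotation."""
    if len(points) < 2:
        return "unknown"
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)

    # Accumulate the signed angle swept around the centroid of the track.
    swept = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        step = math.atan2(y1 - cy, x1 - cx) - math.atan2(y0 - cy, x0 - cx)
        swept += (step + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)

    if abs(swept) > 1.5 * math.pi:  # roughly a full turn
        # Counterclockwise in the mathematical sense (y axis pointing up).
        return "rotate_ccw" if swept > 0 else "rotate_cw"

    dx = points[-1][0] - points[0][0]
    dy = points[-1][1] - points[0][1]
    if abs(dx) >= min_travel and abs(dx) > 2 * abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "unknown"


swipe = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
circle = [(math.cos(math.radians(a)), math.sin(math.radians(a)))
          for a in range(0, 361, 45)]
print(classify_gesture(swipe))
print(classify_gesture(circle))
```

Only differences between successive positions enter the decision, so the sketch works with relative positions, as the text describes. The accumulated angle could also serve directly as the quasi-analog value for a setting such as volume.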

Operation Principle

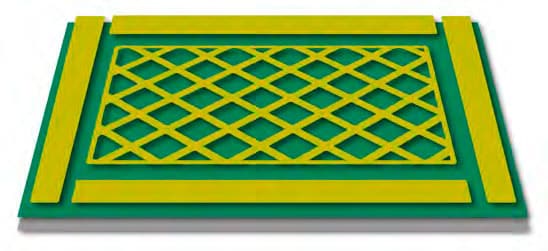

An electric field is established between the two electrodes of a capacitor. In the case of the 3D Touch, this field is aligned so that it protrudes outward (i.e., toward the operator/viewer). During calibration, the capacitance of this capacitor is measured as a reference. Every object that enters the field influences the field lines and thus the capacitance between the two electrodes (see Figure 7). The measurement procedure evaluates the change and converts it into a distance of the object both from each of the two electrodes and from both together. The first evaluation gives a position between the two electrodes, and the second points into the third dimension. If a second pair of electrodes is arranged orthogonally to the first, the position in the other axis can be determined in the same way.
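The two evaluations can be sketched for one electrode pair. The sketch assumes that the controller provides one signal per electrode and that the calibration values are subtracted as a baseline; the normalization, the noise threshold and all names are illustrative assumptions, not the algorithm of a specific controller.

```python
from typing import Optional, Tuple


def estimate_position(
    baseline: Tuple[float, float],
    reading: Tuple[float, float],
    noise_floor: float = 0.5,  # assumed threshold in signal units
) -> Optional[Tuple[float, float]]:
    """Return (lateral, proximity), or None if no object is detected.

    lateral runs from -1.0 (at the left electrode) to +1.0 (at the right);
    proximity grows as the object comes closer to the electrode pair.
    """
    d_left = abs(reading[0] - baseline[0])
    d_right = abs(reading[1] - baseline[1])
    total = d_left + d_right
    if total < noise_floor:
        return None  # change too small to distinguish from noise
    lateral = (d_right - d_left) / total  # position between the electrodes
    return (lateral, total)               # total points into the third dimension


# Assumed example: the left electrode changes more, so the hand is left of centre.
print(estimate_position((100.0, 100.0), (103.0, 101.0)))  # (-0.5, 4.0)
```

A second, orthogonal electrode pair evaluated in the same way would supply the other axis.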

As elegant and simple as the described method sounds in theory, implementation is complex in practice. Measured field strengths vary with external influences such as temperature, humidity and mechanical tolerances across a series of devices. The touch controller uses AI methods to infer the original signal by evaluating the measured values. Accordingly, the recognition of gestures works very similarly to the recognition of speech and handwriting. The high degree of integration of the IC (integrated circuit or microchip) makes it easy to use for the designer, who does not have to deal with the theoretical background. Figure 8 shows how the electrodes for a 3D touch system are constructed.

Applications

3D touch technology can be used wherever gestures are to be recognized in front of the touchscreen, and resolution and accuracy are not important. In many applications, the 3D touchscreen is supported by an underlying two-dimensional one that determines the position in the x/y plane. The big advantage of the technology is that gestures can be performed “blindly” without looking at the touchscreen. In situations such as in a motor vehicle, this contributes to traffic safety. A swipe gesture can be used to select the next CD track or radio station, while a circular motion controls the volume. Feedback is provided by the operator’s ear, not by the eye.

In addition to consumer products such as notebooks and audio (e.g., Bluetooth® headphones), the smart home also plays a role. For white goods, air-conditioning and blind controls or light switches, the third dimension can be used to activate functions. Instead of aiming a finger precisely at the sensor field, the rough movement of a hand in front of the sensor is sufficient to trigger the “default” function, which, for example, switches off all lights when the user leaves the room.

In the medical field, 3D technology can facilitate the operation of medical equipment because actions can be performed without contact and the operator remains sterile. As an example, consider a surgical light where position, brightness and color can be adjusted without touch.

3D touch technology can also be used without a display. The field is strong enough to penetrate wooden tabletops or kitchen countertops, which opens up interesting fields of application.

Voice Control

Especially where no hand is free or clean enough to operate a control element, or where the eyes cannot be averted from the object currently being viewed (such as during an operation or working under a microscope or magnifying glass), voice-based control demonstrates its advantages. Not to be underestimated is the ability to enter commands and parameters simultaneously with a single sentence, rather than shuffling through the menu in the graphical user interface (GUI) to set functions and values individually. All commands and settings found in a traditional GUI can be activated simultaneously from the “main” level. This is accompanied by a significant increase in efficiency.
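Entering a command and its parameter in a single sentence can be sketched as follows. The sketch assumes that speech recognition has already produced text; the tiny grammar, the setting names and the function name are hypothetical.

```python
import re
from typing import Dict, Optional, Union

# Hypothetical one-sentence grammar: "set <setting> to <value> [percent]".
COMMAND = re.compile(
    r"^set (?P<setting>brightness|volume|temperature) to (?P<value>\d+)(?: percent)?$"
)


def parse_utterance(text: str) -> Optional[Dict[str, Union[str, int]]]:
    """Extract command and parameter from one recognized sentence."""
    match = COMMAND.match(text.strip().lower())
    if match is None:
        return None  # sentence is not part of the permitted dialog
    return {"setting": match["setting"], "value": int(match["value"])}


print(parse_utterance("Set brightness to 70 percent"))
```

In a graphical interface the same action would typically require opening a menu, selecting the setting and then adjusting the value.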

Basically, a distinction is made between online and offline voice control. Devices that tend to be networked with others, such as consumer electronics, home automation and media control, can control other devices through that network. Devices that are self-sufficient take advantage of offline operation: function is guaranteed even in areas without network coverage, data is secure due to the privacy-by-design approach and the fixed installation guarantees long availability independent of the internet provider.

Voice control is also becoming interesting for capital goods in industry, as it enables further operating variants and increased flexibility. Due to the long service life of a machine and the increased need for safety in production, the potential user has further requirements: The voice input solution must be available over a long period of time and be expandable, as needed. The activation word, also called “wake word,” must be freely selectable. Many international languages must be available, one of which is selected during installation or maintenance.

Applications

Voice control speeds up complex operating tasks by combining commands and parameters in one step. Support can be contextual, which is especially important for augmented reality. Data retrieval in expert systems is simplified, and digital assistants and collaboration tools optimize workflows.

Another aspect is in-process logging. Certain routine tasks can be performed hands-free, and the machine can automatically check the completeness of the log. This plays a special role in quality assurance documentation during machine maintenance or aircraft inspection. Findings can be dictated directly to the system, which enters the data in the correct place in the log, regardless of the sequence in which they are reported.
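Sequence-independent logging with a completeness check can be sketched with a simple keyed record. The checklist items and the class name are hypothetical examples.

```python
from typing import Dict, Iterable, List


class InspectionLog:
    """Log that accepts findings in any order and reports what is missing."""

    def __init__(self, required_items: Iterable[str]) -> None:
        self.required = tuple(required_items)
        self.entries: Dict[str, str] = {}

    def record(self, item: str, finding: str) -> None:
        if item not in self.required:
            raise KeyError(f"unknown checklist item: {item!r}")
        self.entries[item] = finding  # stored under its item, not by arrival order

    def missing(self) -> List[str]:
        return [item for item in self.required if item not in self.entries]

    def is_complete(self) -> bool:
        return not self.missing()


log = InspectionLog(["oil level", "brake wear", "door sensor"])
log.record("door sensor", "ok")       # dictated first, although listed last
log.record("oil level", "topped up")
print(log.missing())                  # ['brake wear']
```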

Designing with Voice Control

With the help of a web-based development environment, only a few steps are necessary to define a system for your own application. The speech dialog, i.e., the activation word that gets the system’s attention, the permissible commands and their parameters, is compiled in the web tool as text input (see Figure 9). The first processing step already takes place during input: Graphemes, i.e., the input characters, are converted into phonemes, i.e., the smallest acoustic components of speech.
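The grapheme-to-phoneme step can be pictured as a lookup from written words to sound symbols. The sketch below uses a hand-written toy lexicon with ARPAbet-style symbols; both the lexicon and the function are illustrative assumptions and not the method of the web tool.

```python
from typing import Dict, List

# Hand-written toy lexicon with ARPAbet-style symbols (illustrative only).
LEXICON: Dict[str, List[str]] = {
    "light": ["L", "AY", "T"],
    "on": ["AA", "N"],
    "off": ["AO", "F"],
}


def to_phonemes(phrase: str) -> List[str]:
    """Convert a written command into a flat phoneme sequence."""
    phonemes: List[str] = []
    for word in phrase.lower().split():
        if word not in LEXICON:
            raise KeyError(f"no pronunciation for {word!r}")
        phonemes.extend(LEXICON[word])
    return phonemes


print(to_phonemes("Light on"))  # ['L', 'AY', 'T', 'AA', 'N']
```

Real systems also need rules or trained models for words that are not in a lexicon, which a plain lookup like this cannot provide.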

Once all words are defined, machine learning and AI-based algorithms are used to translate the defined language resources into a statistical and a semantic model for download. The result is downloaded to the target platform and launched. Then the network plug can be pulled; the final product runs autonomously. The flow in the finished application is shown in Figure 10.

Runtime resource requirements are moderate. Therefore, the system is also suitable for collaborative integration, where voice control runs in parallel with the application software.

Applications

Applications for offline voice control include household and kitchen appliances, ordering machines in fast-food restaurants, information columns in shopping and travel centers, in test and measurement technology and in the sterile environment of medical technology.

Other Technologies

In addition to the technologies mentioned above, there are other technologies that do not require direct contact with surfaces.

Eye Tracking

Eye tracking evaluates the movement of the pupils and eyelids to control the cursor and to trigger actions by blinking, which takes the place of a click. For the inexperienced user, the operation is rather difficult. The rigid focus of the eyes leads to fatigue, so this input method is feasible for only a limited time.

Micro-Radar

A radar can be used to detect and evaluate the position of objects within its field of view. This technology is not yet of any significance for the operation of equipment and machines.

LiDAR/ToF

Light detection and ranging (LiDAR) is based on a light field that can be used to detect objects and gestures spatially. The resolution is higher than with radar. The time-of-flight (ToF) method measures the time it takes for light emitted by a transmitter to reach the receiver after being reflected by an object. Applications for this include cell phones (camera distance measurement, face recognition) and vehicles (autonomous driving).
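The time-of-flight relationship is simple enough to write down directly: the light travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The function name and the example value are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the reflecting object from the measured round-trip time."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# A round trip of 2 nanoseconds corresponds to roughly 30 cm.
print(round(tof_distance_m(2e-9), 3))  # 0.3
```

The example also shows why the method demands fast electronics: resolving centimeters requires timing on the order of tens of picoseconds.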

BCI

Known as a brain-computer interface (BCI), the direct interface “from brain to computer” evaluates brain waves, interpreting electrical nerve signals as intended actions and translating them into movements of a robot. Applications include exoskeletons that assist people in physical activities and prosthetic limbs that replace missing functions or support impaired ones. The technology is not yet mature, as AI software is needed to properly interpret the sensed voltages.

Summary

For operating a device without direct touch, one of many options can be selected depending on the application, installation location and user group. The new-generation remote infrared touchscreen, for example in the holographic touch display, allows precise positioning of the input, while the 3D touchscreen with gesture control is suitable for more qualitative input (“more/less”). If the hands are not available, offline voice control offers an alternative to a conventional GUI.

Figure 1: Operating principle of the infrared sensor

Figure 3: Head-up display in a vehicle

Figure 4: Operating principle of the holographic display

Figure 5: The arrangement of the display and the holographic plate determine the orientation of the image.

Figure 6: Continuous operation and disinfection

Figure 7: Path of field lines for 3D touch in open position and when disturbed by finger

Figure 8: Electrode arrangement for 3D touch

Figure 9: Designing a voice dialog

Figure 10: Offline voice control within an application

