Choosing the right evaluation methods

You wouldn’t measure your weight with a ruler, would you? So, before you start collecting information about the impact of your training, you have to ensure that you have the right measures and methods for the job. Critically, you may also need to establish where you are (your datum, or baseline) before any training is undertaken.

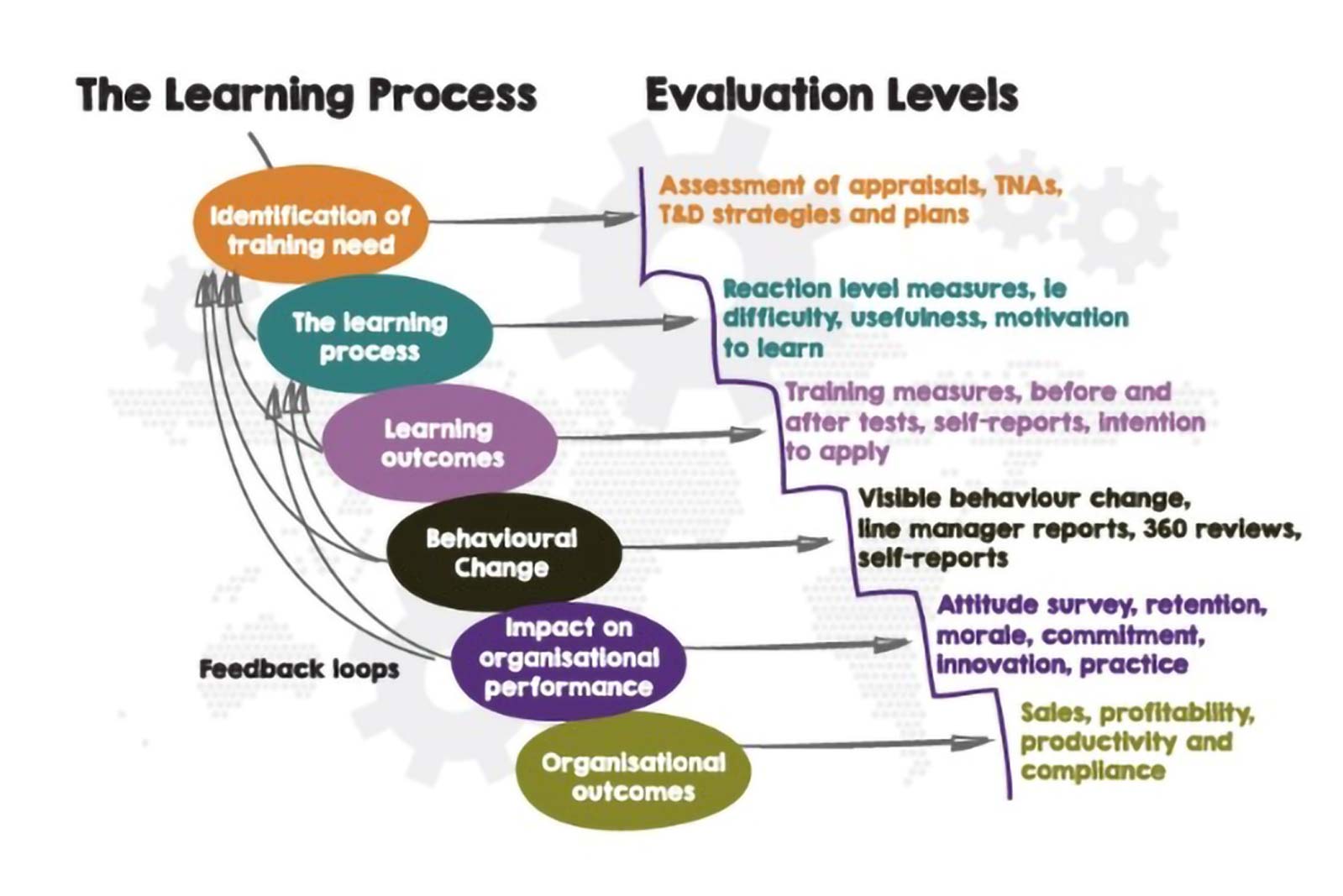

In the previous edition, we looked at training evaluation models. In this edition, we explore some of the most common evaluation methods. In some instances, it is useful to combine more than one.

The different methods of evaluation include:

Observation

Observing is (obviously … duh …) the process of watching people as they undertake a task or process, or engage in some other form of activity. Results are usually recorded in a journal that details performance both before and after the training. This type of evaluation will be familiar to anybody who’s undertaken an NVQ (National Vocational Qualification).

There are a number of advantages to using observation as a training evaluation tool. You can use existing staff resources (though you don’t have to), usually the individual’s manager or mentor. These guys (and gals) are likely to be close at hand, know the people and their capabilities, and can easily observe any changes in learning and behaviour at the “coal-face” in the workplace. As a result, it costs zip: nothing more than the observer’s time. It also tends to be more accurate than self-report questionnaires, which can be biased (incidentally, so can anyone!) or distorted by poor memory.

Oh … and just in case you are thinking otherwise, “observation” does not have to mean an old-school stopwatch-and-clipboard approach. It can simply entail listening to a recording of a call, sitting in on a meeting with someone, or a supervisor dropping in to check the work of an engineer on a site visit. We are certainly not advocating trench coats, spotlights and interrogation techniques!

However, there are some downsides. First, you need to find someone objective and knowledgeable with enough time on their hands to watch people for as long as it takes. Second, there is the risk that people will change their behaviour simply because they know they’re being “watched.”

Even so, observation is often the best tool, especially when behaviour changes aren’t easy to measure quantitatively. Empathy, creativity and honesty would all be tough to “measure” on the job; you’d be far better off observing someone during a meeting or two.

Exams, Assessments and Tests

Exams, assessments and tests, sometimes undertaken before and after training, are a great way to assess changes in knowledge and skills. They come in a range of shapes and sizes: formal exams and qualifications, written tests and assignments. All of these can be time-consuming to sit and grade but, luckily, online learning management systems (LMS) can increasingly manage these tasks for you, often in a fun and interactive way.

Depending on your view of them, perhaps the best part about exams, assessments and tests is that you can measure a specific skill or knowledge area without the distraction of being observed. For example, you could measure a sales rep’s understanding of a new product with a few multiple-choice questions completed in a private, quiet environment. Additionally, you only need to create a test once, and if you place it on an LMS, that’s it, job done: 1) you don’t have to invest any more time, and 2) you can track results for as long as the product is sold and the test remains useful.

The downside? There are probably a couple of biggies:

- Exams, assessments and tests don’t usually measure knowledge and skills in the workplace, that is, where they will actually be used … and, most of the time, you really want to know whether the learning can be applied.

- Exams, assessments and tests are not always the best measure of soft skills such as persuasion, empathy, assertiveness and listening, which are possibly better assessed by observation.

Surveys — Training Evaluation Questionnaires

A survey, or training evaluation questionnaire, collects data through a series of questions, often multiple-choice. The upside is that surveys are quick, easy and efficient:

- They can be designed in a short time and sent to millions in an instant.

- Again, they can be made part of an LMS that automatically issues reminders, reports and updates on who has completed what and what is still outstanding.

The downside is that many of us do not like them. How often do you simply press delete or file surveys in the bin? It’s therefore critically important to explain to staff that surveys help the providers improve the training delivered, and that you really do want to hear their feedback to get a view of what’s actually been learnt.

Also, because surveys usually ask for perceptions and opinions, as opposed to hard data, they are often best suited to measuring how successful the learning experience was, rather than how the learning will be applied.

Interviews

Interviews are essentially “live questionnaires” in a slightly more freeform style. They can be face-to-face or virtual. They’re usually at least as effective as questionnaires because you can ask questions, answer the interviewee’s questions and deep dive into any interesting responses. Additionally, interviews generally elicit more valuable and detailed information from employees than questionnaires do.

However, that flexibility brings downsides, too. First, interviews are usually conducted one to one, so they take considerable time for both parties. Second, if a large number of people are being trained, evaluating the overall programme might take a long time. There is also an opportunity cost in undertaking interviews: both parties could be doing other things. Finally, if there are different interviewers, it can become difficult to compare and summarise results, especially if some interviewers have gone “off piste” and deviated from the brief.

Focus Groups

Essentially, a focus group is a carefully facilitated discussion with a small group of people who have all completed the same training. It’s a great tool for exploring what people think and feel about the training delivered, and for gathering feedback for future improvements.

Focus groups have an advantage over interviews in that they are far less time-consuming; you can question a number of people at the same time. Additionally, group dialogue can often lead to a wider and deeper conversation about topics that might not have arisen in one-to-one consultations.

This makes focus groups a particularly effective way to unpack obstacles to training success, and to explore ideas for improvement. Just watch out for group conflict or any other dynamics that could derail your ability to gather constructive information about training.

Performance Records

The whole idea of training, training needs analysis and training evaluation is to improve performance consistently and systematically in ways that benefit the business. So, if training doesn’t improve business performance in some way, it ain’t worth doing!

As a result, performance records should be a critical part of any training evaluation. The performance records you choose will depend on the training being undertaken. Common examples for sales staff are calls made, deals closed, conversion rates and revenue generated; for engineers, they might include jobs completed, missed visits, return visits, accurate timesheet completion and the quality of service sheets. The list is very long.

The big advantage of performance records is that they:

- Should be based on numbers that align with company objectives

- Should be readily and easily available

- Are not opinions!

Performance records should be free from bias and a trusted source of information by which to judge success.

The main downside of performance records is that they sometimes create more questions than they answer. Yup, performance data shows you where a problem exists, but not necessarily why it exists. So, to get to the bottom of “why,” you may need to leverage more qualitative tools, like interviews or focus groups.

They also presuppose that training is the only reason the results have changed. They don’t easily account for a sudden shift in market sentiment, or for a competitor that has recently gone pop, sending both more work and more A-grade staff your way just after you’ve completed training sessions on sales and marketing and on developing better recruitment processes!

Conclusion

As noted in the last column, the most critical components of training evaluation are establishing, at the very start of the training initiative, what you want to teach and the criteria by which you will evaluate it.

These decisions will then inform the evaluation methods best suited to the endeavour. Don’t forget that it can be useful to employ more than one method: if most respondents rate the learning experience poorly on the questionnaire, interviews might ascertain why. Alternatively, if they rate the learning experience highly but their on-the-job performance doesn’t improve, you could use interviews to identify the reasons for the discrepancy.

Get more of Elevator World. Sign up for our free e-newsletter.